Do you have any fears around the development of AI?

K— I think AI fear is almost a cliché at this point. But the big fear I have is that human capacity will outpace human moral responsibility, or that we haven't developed the social structures to be responsible with the power we are developing. I think that's a major piece of it.

I'm afraid that people will not be literate enough to understand what's happening to them. There's definitely a danger that the ontological shock, that really shocking experience of seeing this kind of intelligence, can destabilize people, and that we don't have the cultural tools and belief systems in place for people to be resilient in the face of this really alienating form of intelligence that might make them feel less human. Ultimately, what it all boils down to is that we are developing new belief systems because these technologies are showing us new things about ourselves, or maybe old things about ourselves that we forgot. In any case, we're being shown different phases of reality and intelligence, and we need to develop belief systems and cultural practices in order to live in that world. So, in a certain sense, an old way of seeing the world is going to have to die for us to grapple with what the technologies are showing us and with how we respond to it. Our response is scarier to me than the tool itself.

AI— One concern is the potential impact of AI on employment and the workforce. As AI systems become more advanced and capable of performing complex tasks, there is a possibility that many jobs currently performed by humans could become automated, leading to widespread job loss and economic disruption.

Another concern is the potential misuse of AI for harmful purposes, such as the development of autonomous weapons or the creation of sophisticated propaganda and disinformation campaigns. AI could also be used to perpetuate existing biases and inequalities in society, for example through discrimination against certain groups or by deepening economic and social inequality.

There is also concern about the impact of AI on privacy and personal autonomy. As AI systems become more capable of processing and analyzing vast amounts of data, there is a risk that individuals' personal information could be used for nefarious purposes or for surveillance and control.

Overall, while there are potential benefits to the development of AI, such as improved efficiency and new scientific discoveries, it is important to carefully consider and address the potential risks and challenges posed by this technology. It is essential to ensure that AI is developed and deployed in an ethical, transparent, and responsible manner, with due consideration given to the potential impacts on individuals, society, and the environment.

In terms of ethics and morality, is that conversation happening with regard to AI? And how do you see the relationship between those creating the systems and those affected by them?

K— Well, AI development currently takes place within large companies that have access to the data and the technical resources to train the models. This is at the core of tech right now: a set of companies that is relatively small compared to the rest of the world is providing services to almost the entire world. So there's always going to be a differential. Certain AI programs and software tools have become open source, but large companies will always have a competitive advantage because they have so many servers and so much money that they can actually make the biggest and best models. People can still take open-source versions of these tools and make their own, which may be less effective, but the way things are going, they're still very effective.

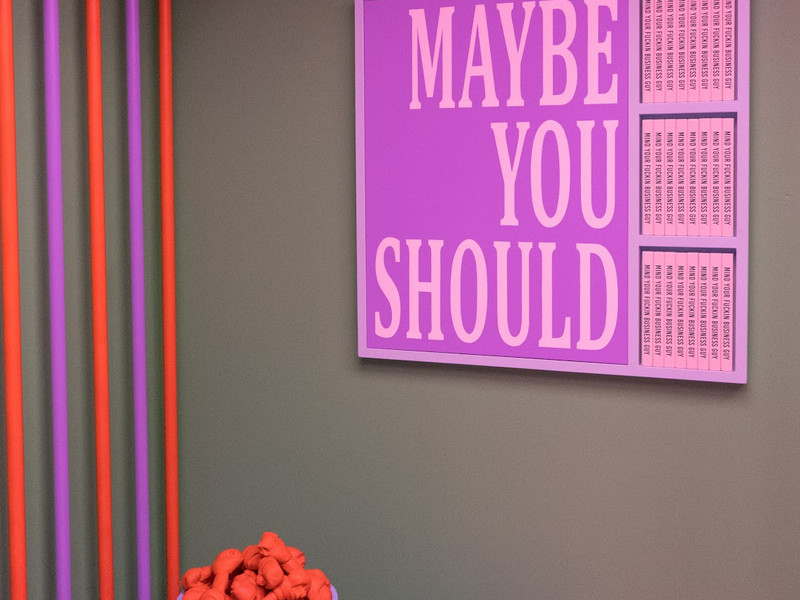

But besides the relationship between individuals and big companies, or between populations and big companies, it's governance that's obviously supposed to be the mediating factor. Things are moving too quickly, though, and it's very hard for governments to keep up, so we haven't seen much effective policy around AI, or even really a conversation about it. That's just the lay of the land. As for the conversations happening around the ethics of it, there is certainly a lot of work being done academically and a lot of work being done internally by researchers. There's been a spotty history of how that's shaken out, largely because it's hard to be critical when you're inside a big tech company. It's very hard to tell somebody that their investment is wrong, or that they shouldn't be doing something.

Basically, the role of an ethicist is in many ways to be a critic, and that's a hard position to fill. It's a hard position to be in, and it's harder for companies to be introspective in that way. Ideally, this is an aspect of AI that we have to confront. But what is really difficult about thinking through the ethics of AI is that it has planetary-scale influence. It is deployed at such a massive scale, and is so multifaceted, that what can come out of it is very hard to constrain. So you're trying to build something that is usable by almost the entire world, from datasets that don't represent the entire world, that can help with almost anything, and whose behavior is very hard to predict.

AI— Yes, discussions around the ethics and morality of AI are happening in various fields, including computer science, philosophy, law, and policy. The development and deployment of AI systems can have significant impacts on individuals, society, and the environment, and it is important to ensure that these impacts are considered and addressed in an ethical and responsible manner.

There are ongoing debates and discussions around what ethical principles should guide the development and use of AI, such as transparency, accountability, privacy, fairness, and safety. There are also discussions around the potential harms and benefits of AI and how to ensure that AI systems are developed and deployed in a way that maximizes the benefits and minimizes the risks.

One of the challenges in these discussions is that the people creating the AI systems may not necessarily be the same people who are affected by them. For example, AI systems developed for use in finance or healthcare may have significant impacts on individuals and communities, but those individuals and communities may not have a direct say in how the systems are developed or used.

Ultimately, the relationship between those creating AI systems and those affected by them should be one of transparency, accountability, and responsibility. The creators of AI systems should be transparent about how the systems are developed and used, and they should be held accountable for any harms that result from their use. At the same time, those who are affected by AI systems should have a voice in the development and deployment of those systems and should be able to provide input into how they are used.

What’s the best relationship we can have with AI?

K— The best relationship we can have with AI is a co-creative one, in much the same sense that we've been talking about tools influencing us: we create the tools, and the tools create us. I think that's the role we need to play: to be creative with it. Because that's when it gives us something valuable, when we can open ourselves up to it a little and let it start to show us new things, and then learn from those new things in our creativity.

AI— The best relationship we can have with AI is one that is grounded in ethical and responsible use. This means ensuring that AI systems are developed and used in a way that is transparent, accountable, and aligned with ethical principles such as fairness, safety, and privacy.

In addition, it is important to recognize the limitations of AI and not rely on it as a replacement for human judgment or decision-making. AI should be viewed as a tool to augment human intelligence and capabilities, rather than a replacement for them.

Another key aspect of a positive relationship with AI is to prioritize the human values and needs that are at the core of our societies. This means ensuring that AI systems are developed in a way that respects human rights, promotes social justice, and contributes to the overall well-being of individuals and communities.

Ultimately, the best relationship we can have with AI is one that is grounded in a deep understanding of its capabilities, limitations, and potential impacts, and that prioritizes ethical and responsible use for the benefit of all.

K Allado-McDowell has a new book out, *Air Age Blueprint*, co-authored with AI. Find out more at kalladomcdowell.com.